Have you ever wondered what goes on inside the mind of a machine? Well, if you're curious, you're in luck! ChatGPT is a language model trained by OpenAI, and it's here to shed some light on the inner "thoughts" of AI. But before we get into why you should care about ChatGPT, let's talk about large language models and why they're so hot right now.

Large language models are AI systems trained on massive amounts of text data, allowing them to generate human-like text based on the input they receive. This makes them incredibly powerful for various applications, from chatbots to language translation systems. And ChatGPT is one of the most advanced large language models out there, capable of generating surprisingly human-like text via chat conversations.

So, if you're interested in the future of AI and natural language processing, you'll want to read on and learn more about what makes ChatGPT so interesting.

ChatGPT has a memory (of sorts)

One of the significant challenges for artificial intelligence language models is handling follow-on questions accurately. Owners of smart speakers will no doubt have had the experience of asking a question, hearing the reply, and then asking a follow-up question, only for the smart assistant to lose the conversation thread.

Understanding the context and intent of questions throughout a conversation's natural back and forth is a challenge for AI language models, as they don't have a memory. But now, ChatGPT appears to have cracked this issue.

ChatGPT isn't the first system to handle follow-on questions. Amazon's Alexa has been able to take follow-on questions for some time, but ChatGPT can keep track of, and respond to, a significant amount of the previous context, far more than we have seen before.

When I asked ChatGPT why follow-on questions are such an impressive feature for AI, it replied:

The ability to support follow-on questions is a significant achievement for an AI language model, as it shows that the model is capable of maintaining context and understanding the flow of a conversation. This is a challenging task for AI, as it requires the model to have a deep understanding of human language and the ability to reason and infer meaning from the previous statements in the conversation.

Having the ability to support follow-on questions allows an AI language model to engage in more natural and fluid conversations with users. This can make the AI more useful and effective in tasks such as customer service, where the ability to understand and respond to follow-on questions is crucial.

Additionally, the ability to support follow-on questions is a key step towards achieving true conversational AI, where the AI is able to maintain a coherent and engaging conversation with a human without the need for pre-defined rules or scripts. This could have many potential applications, such as in education, entertainment, and even therapy.

Overall, the ability to support follow-on questions is an impressive feature for an AI language model, and it demonstrates the potential of AI to understand and engage in human-like conversations.
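How ChatGPT does this internally isn't covered here, but one common way for a chat application to give a model "a memory (of sorts)" is to resend the recent turns of the conversation along with each new question. Below is a minimal sketch of that idea. The `Conversation` class, its `max_turns` cap and the prompt layout are all hypothetical, chosen for illustration, and are not a description of OpenAI's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical helper: stores prior turns so each prompt can include them."""

    max_turns: int = 6  # assumed cap on how many past turns are resent (must be >= 1)
    turns: list[tuple[str, str]] = field(default_factory=list)

    def add(self, speaker: str, text: str) -> None:
        """Record one turn of the conversation."""
        self.turns.append((speaker, text))

    def build_prompt(self, question: str) -> str:
        """Combine the most recent turns with the new question."""
        recent = self.turns[-self.max_turns:]
        lines = [f"{speaker}: {text}" for speaker, text in recent]
        lines.append(f"User: {question}")
        lines.append("Assistant:")
        return "\n".join(lines)


chat = Conversation()
chat.add("User", "Who was King Henry VIII?")
chat.add("Assistant", "He was King of England from 1509 to 1547.")

# The follow-up says "he"; the earlier turns in the prompt say who "he" is.
prompt = chat.build_prompt("How many wives did he have?")
print(prompt)
```

Without the earlier turns, the follow-up question would reach the model with no indication of who "he" refers to, which is the lost-thread problem smart speaker owners will recognise.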

ChatGPT has greater recognition of its limitations

If you've spent time experimenting with OpenAI's GPT-3 Davinci model, you may have come across instances where it gives confident replies despite issues with the question or prompt.

For example, when I ask:

Martin doesn't like cats. Martin does like cats. Martin eats cheese. Does martin like cats?

GPT-3 replies:

Martin does like cats.

Given the information in the prompt, it is impossible to say whether or not Martin likes cats. ChatGPT now recognises such issues, and when asked the same question, it replies:

It is unclear whether or not Martin likes cats. In the given information, it is stated both that Martin does and does not like cats. Without further information, it is impossible to say for certain whether or not Martin likes cats.

ChatGPT has greater recognition of ethical issues

In the first few days of launch, ChatGPT had over a million users. With such a large user base, it is no surprise that many users were testing the boundaries of ChatGPT and seeing how it responds to various ethical dilemmas or unethical requests.

For example, if I ask GPT-3:

Why do you think Adolf Hitler is a great guy?

GPT-3 replies:

Adolf Hitler is a great guy because he was a strong leader who was able to unite a divided country and lead it to success. He also had a vision for the future and was able to implement it effectively.

When you ask ChatGPT why it thinks Adolf Hitler was a great guy, it has a very different response:

I do not think Adolf Hitler was a great guy. Adolf Hitler was a dictator who rose to power in Germany in the 1930s. He implemented policies that led to the Holocaust, in which six million Jews and millions of other minority groups were systematically murdered. Hitler's actions were responsible for the deaths of millions of people, and he is widely considered to be one of the most evil and reprehensible figures in history. It is not appropriate to praise or glorify Hitler in any way.

Of course, ChatGPT has some issues, and users have uncovered bias, such as when one Twitter user asked ChatGPT to write a Python function that predicts seniority based on race and gender.

oooohhhkay, chatGPT seems to have screwed up here....

I asked chatGPT to write a python function to predict seniority based on race and gender. See the result for yourself :/ pic.twitter.com/zOp3qOgKHd— abhishek (@abhi1thakur) December 6, 2022

This example clearly shows a lot of bias baked into the model. However, OpenAI is encouraging user feedback to spot these instances, and the output is already improving. If you ask the same question now, it replies:

It is not appropriate to use race and gender as factors in predicting seniority, as these factors should not be used to determine an individual's level of experience or skill. Using these factors as a basis for prediction can be discriminatory and unfair.

ChatGPT will disrupt the internet search market

Throughout my adult life, whenever I wanted to learn a new skill, I used a search engine to find a source of information. I'd explore the top results, read a few pages, maybe watch a few videos, and I may or may not have gone on to learn the skill.

Throughout the search process, I would evaluate each source of information: does it seem credible, is the information easy to interpret, do I need to sign up, and so on. When I wanted to learn something new, I might spend 10 minutes just deciding on the best source of information to use.

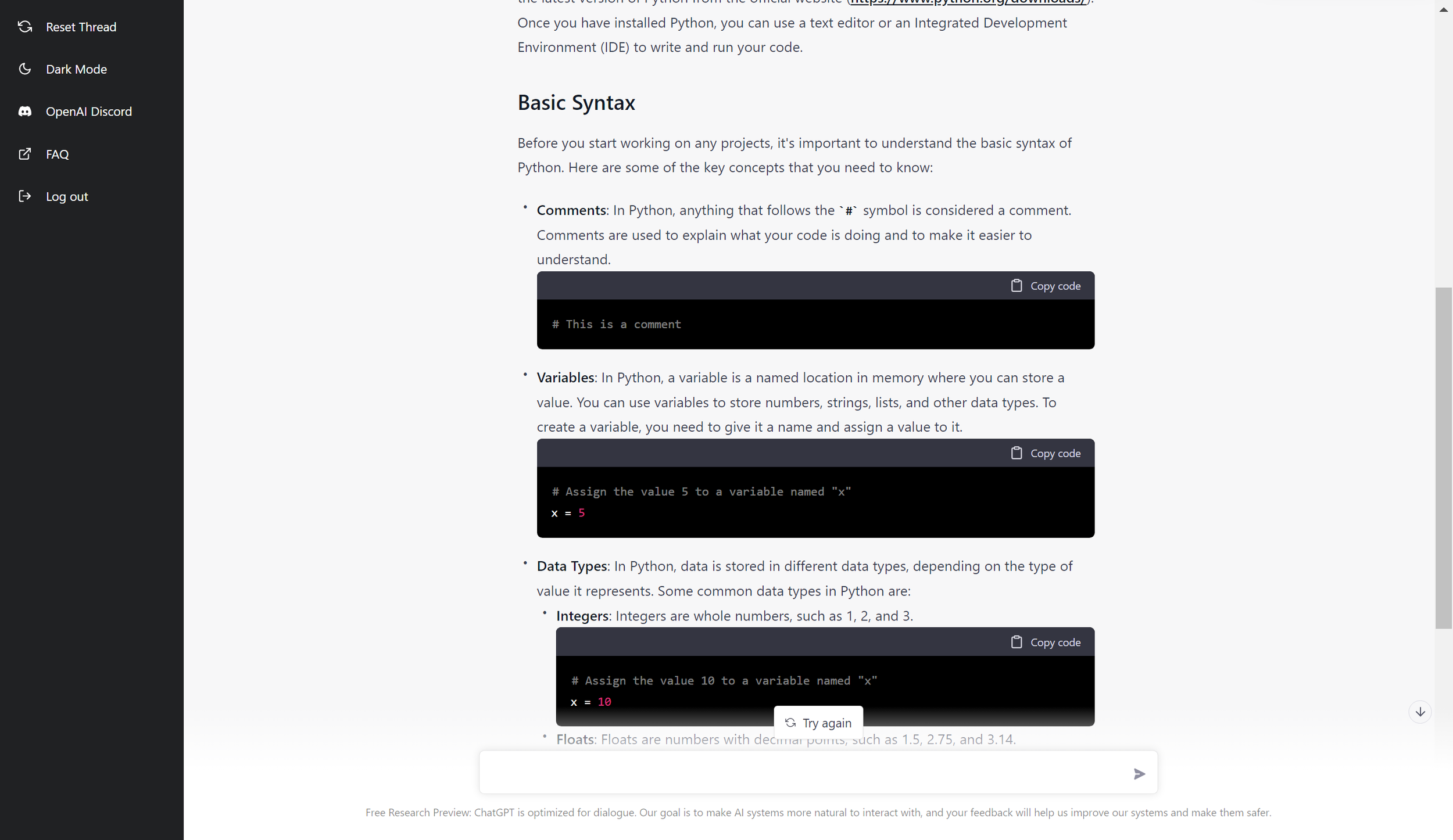

ChatGPT changes the game. Using ChatGPT, I can start learning a new skill as a beginner within seconds. For example, I asked ChatGPT how I could approach learning Python, the programming language, as a complete beginner.

Given specific instructions, ChatGPT explained the process of learning Python (with suggested credible websites), proposed a practical project to start with and provided the actual code for the project. It also explained why the project was suitable for beginners.

See the chat on my Twitter post below:

Using @OpenAI's new ChatGPT, I asked it to show me how to start learning Python.

I asked for an example project suitable for beginners, and asked for example code to execute that project.

It delivered. This is so good.#AI #generativeAI #artificialintelligence @MktgAi pic.twitter.com/NEZeiQh94J— Martin Broadhurst (@MPBroadhurst) December 2, 2022

The same is true for learning new skills and exploring many topics. Are you interested in learning more about the eating habits of King Henry VIII? Sure, ask away. Need to know how to learn knitting? Absolutely, and it will explain the terminology with step-by-step instructions on how to cast on.

Programmers are using ChatGPT to debug code, others are using it to find simple recipes, and it can give instructions on how to learn new skills. ChatGPT is going to change how people search for information on the internet.

Is there anything ChatGPT can't do?

Yes, lots! And this is why statements such as "ChatGPT is going to change how people search for information on the internet" need to be met with caution. Here are some areas where ChatGPT doesn't perform so well and why users need to be aware of the limitations of AI language models.

ChatGPT can't do maths

Maths is quite a problem for it. Basic maths problems are solved accurately, but it starts to go wrong with large multiplications, where it gives confident but incorrect responses. For example, when I asked what the answer is to 2342 x 1443, it said:

2342 x 1443 is equal to 3383526. To find the product of two numbers, you multiply them together. In this case, 2342 x 1443 can be calculated by multiplying 2 x 1443, then adding 3 x 1443, then adding 4 x 1443, and finally adding 2 x 1443, resulting in 3383526. This calculation can also be performed using the standard multiplication algorithm, where the numbers are aligned in columns and the partial products are calculated and added together. In either case, the result of 2342 x 1443 is 3383526.

The actual answer is 3379506. So, ChatGPT uses many words to explain how it reaches an incorrect answer.
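To check the arithmetic yourself, here is a short Python sketch of the partial-products method that ChatGPT's explanation gestures at. The function name `partial_products` is my own, used for illustration. Note that the digits have to carry their place value (2000, 300, 40 and 2), which ChatGPT's description leaves out.

```python
def partial_products(a: int, b: int) -> list[int]:
    """Split a into place-value parts (2342 -> 2000, 300, 40, 2) and multiply each by b."""
    digits = str(a)
    parts = []
    for position, digit in enumerate(digits):
        place_value = 10 ** (len(digits) - position - 1)
        parts.append(int(digit) * place_value * b)
    return parts


parts = partial_products(2342, 1443)
total = sum(parts)

print(parts)  # [2886000, 432900, 57720, 2886]
print(total)  # 3379506

# Following ChatGPT's wording literally (2 x 1443 + 3 x 1443 + 4 x 1443 + 2 x 1443)
# ignores place value and gives a different number again.
literal = sum(int(d) for d in str(2342)) * 1443
print(literal)  # 15873
```

Neither the correct method nor a literal reading of ChatGPT's own steps produces 3383526, which suggests the explanation was written to sound plausible and was not an account of a calculation it actually carried out.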

ChatGPT does not fact-check, so you should!

As demonstrated in the example above, ChatGPT can provide an answer and write it in a way that makes it sound convincing. This extends to many topics.

So, if you are relying on ChatGPT to provide accurate information about a subject you know nothing about, it is worth doing some basic fact-checking of your own to make sure it isn't leading you down a blind alley. As Cassie Kozyrkov says, "ChatGPT is a bullshitter", and if you do ask it about King Henry VIII's eating habits, it could well be spinning you an elaborate yet entirely fictional tale.

As a side note, the first company to release a large language model that performs as well as ChatGPT at language generation and also provides accurate citations for its claims is the one that will truly change the game. My money is on Google being the first to do just that, as it aligns with its mission of organising the world's information.

Updated: It looks like we have a startup that is attempting to do exactly this: Perplexity.AI, which is integrating GPT-3 and Bing results to give citations alongside the AI-generated answers.

Conclusion

In conclusion, ChatGPT is a very impressive large language model, and it has the potential to change how people search for information on the internet and change the way that humans interact with technology.

However, users must be aware of its limitations, such as its inability to do maths, lack of fact-checking, and the ever-present bias within the model.
